MPI vs. OpenMP in 2025: Where Each Wins

A practical guide to choosing between message passing and shared-memory parallelism for modern HPC and hybrid nodes.

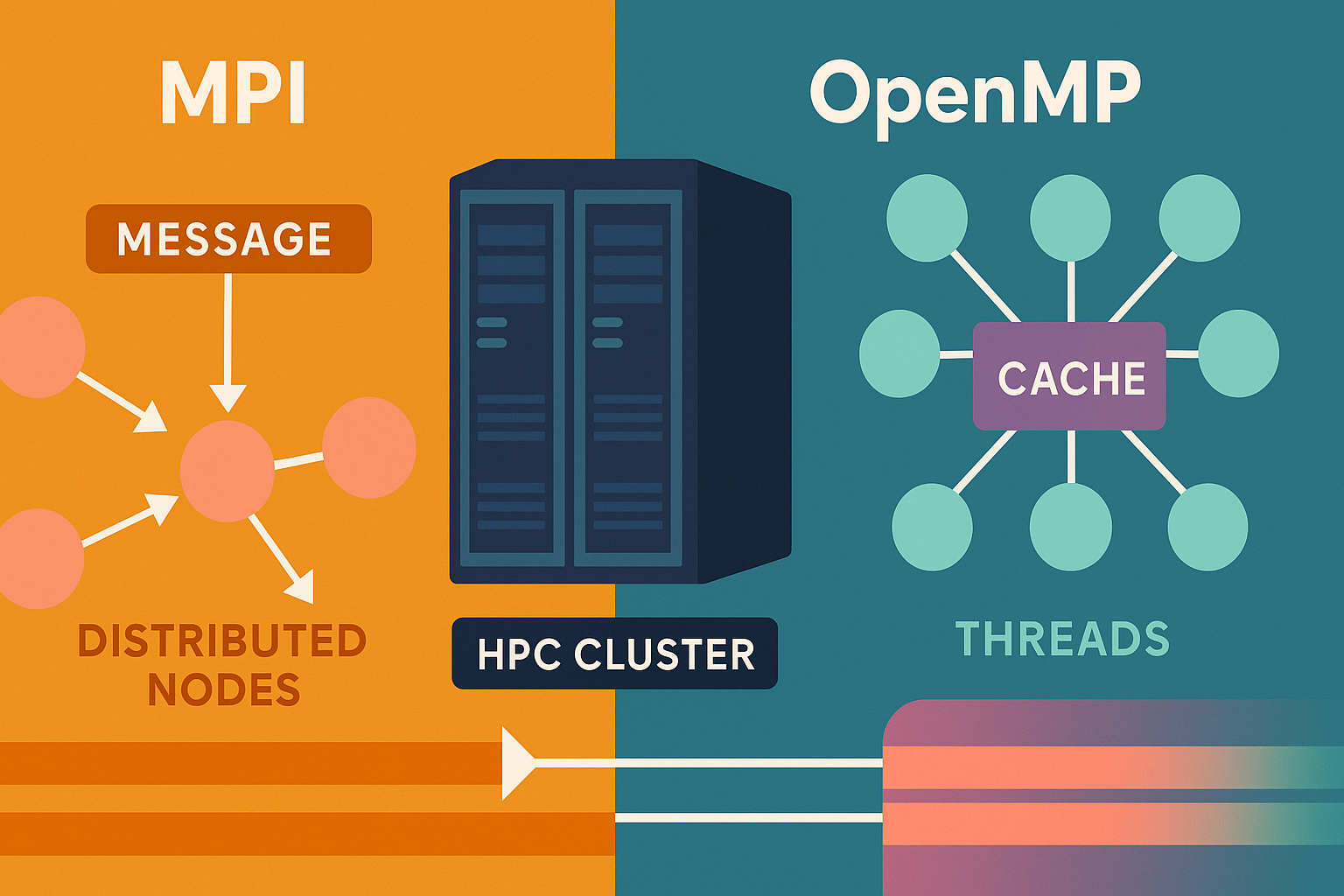

Modern clusters have fat nodes (many cores, large memory) and fast interconnects. That’s why hybrid patterns—MPI between nodes and OpenMP within a node—are common.

When MPI wins

- Distributed memory by necessity: datasets exceed a node’s RAM.

- Clear data ownership and minimal sharing.

- Coarse-grained decomposition with small surface/volume ratio.

When OpenMP wins

- Shared-memory parallel loops and tasks with modest synchronization.

- NUMA-aware data placement still local to a node.

- Rapid prototyping and incremental parallelization of CPU-bound kernels.

Hybrid design tips

- Bind MPI ranks to NUMA domains; spawn OpenMP threads within each. Avoid oversubscription.

- Use non-blocking collectives (MPI_Iallreduce) to hide latency.

- Pin memory and leverage huge pages where available.

- Profile: check MPI time vs. compute vs. OpenMP overhead; fix the biggest slice first.

Example: OpenMP reduction vs. MPI allreduce

// OpenMP reduction inside a rank

#pragma omp parallel for reduction(+:sum)

for (int i = 0; i < n; ++i) sum += a[i] * b[i];

// Then global reduce across ranks

MPI_Allreduce(&sum, &global, 1, MPI_DOUBLE, MPI_SUM, MPI_COMM_WORLD);

Rule of thumb: start simple (single model) and add hybrid complexity only when measurements show you must.

1. Background & Evolution

The Message Passing Interface (MPI) emerged in the early 1990s to unify disparate vendor-specific libraries (PVM, NX, Express). Its design goals: portability, performance transparency, and a comprehensive set of point-to-point and collective operations for distributed-memory architectures. OpenMP appeared later (1997+) as a directive-based standard enabling incremental parallelization of shared-memory (single process, multiple threads) applications—initially focusing on Fortran DO loops, then expanding to C/C++ and more complex constructs.

From 2005–2015, cluster nodes scaled core counts (multi-socket NUMA), GPUs began dominating floating-point throughput, and memory hierarchies deepened (L1/L2/L3, HBM, device memory). By 2025 modern HPC nodes combine:

- Dozens to hundreds of CPU cores (x86_64, ARM Neoverse, RISC-V prototypes) across NUMA domains.

- Multiple GPUs or accelerators (NVIDIA Hopper/Blackwell, AMD MI3x, Intel Max) with >3 TB/s aggregate on-device bandwidth.

- High Bandwidth Memory (HBM) stacks plus conventional DDR5 DIMMs; sometimes CXL-attached memory pools.

- High-speed interconnects (InfiniBand NDR/XDR, HPE Slingshot, Ethernet w/ RoCE v2) enabling sub-microsecond NIC latency with GPU-direct paths.

Hybrid programming is a response to this heterogeneity: MPI expresses distributed ownership across nodes; OpenMP (or other intra-node models) exploits shared memory parallelism within a node or even within an accelerator’s logical cores (e.g., CPU side pre/post processing). Both ecosystems have evolved—MPI adding neighborhood collectives, non-blocking collectives, persistent operations, partitioned communication; OpenMP adding tasks, task dependencies, SIMD, memory allocators, target offload, and detach semantics.

The central question in 2025 is no longer “MPI or OpenMP?” but “Where is the seam between distributed and shared memory responsibilities, and how do we balance concurrency, memory bandwidth, latency hiding, and programmability?”

2. Execution & Memory Models Deep Dive

MPI Execution Semantics

An MPI program is an SPMD (Single Program, Multiple Data) execution of N ranks. Each rank has its own address space; communication is explicit via MPI calls. Key semantics:

- Ordering: Point-to-point operations follow matching rules, not strict FIFO for mismatched tags/sources. Message ordering is defined per (source, tag, communicator) pair.

- Progress: Some MPI implementations require the application to enter the library (e.g., via

MPI_Test,MPI_Wait) to advance outstanding communications; others provide asynchronous progress threads or NIC offload. - Memory Model: No global shared memory; data movement is explicit. MPI-3 RMA (Remote Memory Access) introduces windows for one-sided puts/gets with memory ordering epochs (fence, lock/unlock, PSCW, flush). Still, semantics revolve around explicit synchronization.

OpenMP Execution Semantics

An OpenMP program begins as a single initial thread. Parallel regions create a team of threads; worksharing constructs (for, sections) divide iterations. More advanced semantics:

- Memory Model: Based on a relaxed consistency with flush operations (implicitly inserted at certain constructs) establishing ordering. Data scoping clauses (shared, private, firstprivate, reduction) control variable visibility.

- Tasks: Units of work with potential dependencies forming a directed acyclic graph; runtime schedules tasks potentially out-of-order respecting dependencies.

- SIMD & Vectorization:

#pragma omp simdconveys to the compiler vectorization is safe, controlling reductions and alignment. - Target Offload:

target teams distribute parallel formaps league-of-teams + threads to accelerator execution hierarchies.

Comparative Implications

| Aspect | MPI | OpenMP |

|---|---|---|

| Memory Isolation | Strict (separate processes) | Shared address space |

| Failure Scope | Rank failure often fatal (ULFM in progress) | Thread failure undefined |

| Communication Cost Model | Latency/ bandwidth + matching overhead | Mostly shared loads/stores, coherence & NUMA effects |

| Synchronization | Collective / point-to-point calls | Barriers, atomics, locks, task dependencies |

| Overheads | Context switch (process) + message protocol | Runtime scheduling, false sharing, synchronization |

| Scaling Limit | Memory per rank; network contention | Memory bandwidth per socket; Amdahl on serial sections |

MPI exposes costs explicitly; OpenMP hides some costs (cache coherence, false sharing) requiring profiling to reveal them.

Memory Hierarchy Impacts

In MPI, first-touch is rank-local by construction. In OpenMP, first-touch placement is crucial to avoid remote NUMA traffic; one should initialize large arrays inside a parallel region bound to threads pinned to target NUMA domains. For hybrid codes, allocate large distributed arrays per rank (NUMA aligned), then thread-parallelize inner loops.

3. Communication Patterns & Costs

Latency vs. Bandwidth (Micro & Macro)

Point-to-point time for a message of size m can be approximated as:

T(m) ≈ α + β·m + γ·contention

Where α is startup latency, β inverse bandwidth, γ accounts for queuing or serialization on the network. For small control messages, α dominates; for large halo exchanges, β·m dominates.

Collective Algorithms

| Collective | Small Message Algorithm | Large Message Algorithm | Notes |

|---|---|---|---|

| Broadcast | Binomial tree | Pipelined chain / scatter-allgather | Topology-aware variants reduce hop count |

| Allreduce | Recursive doubling | Ring / Rabenseifner | Ring saturates bandwidth; tree minimizes latency |

| Alltoall | Pairwise exchange | Pairwise (same) with pipelining | Hierarchical for multi-level networks |

| Allgather | Recursive doubling | Ring | Hybrid algorithms switch by size |

Modern MPI libraries choose algorithms via message size thresholds and topology introspection (e.g., UCX, OFI providers). GPU-aware collectives extend these choices (e.g., NCCL ring, tree, CollNet) integrated via MPI interop.

Neighborhood Collectives

Stencil / graph workloads benefit from MPI_Ineighbor_alltoallw to express sparse exchange patterns, reducing software overhead compared to repeated point-to-point postings.

OpenMP Synchronization Costs

Implicit barriers at end of parallel for may dominate when loop bodies are short. Using nowait or tasks with dependencies can mitigate. False sharing inflates coherence traffic when adjacent cache lines are updated by different threads; padding or array-of-structs → struct-of-arrays transformations help.

Modeling Overlap

Overlap potential = (Communication Time – Overlappable Fraction) – Computation Slack. Non-blocking collectives (MPI_Iallreduce) or task-based compute scheduling can hide part of α & β·m. Effective overlap demands progress (either hardware or dedicated thread) and enough independent work.

4. NUMA, Affinity & Topology Awareness

Why Affinity Matters

Memory bandwidth per socket is finite; cross-socket traffic costs higher latency and lower effective bandwidth. Poor thread placement can degrade performance >30% on bandwidth-bound kernels.

Tools & Techniques

- hwloc / lstopo: Visualize topology (sockets, NUMA nodes, cores, caches, GPUs, NICs).

- numactl / taskset: Launch-time pinning for MPI ranks.

- OpenMP Proc Bind / Places:

OMP_PROC_BIND=close/spreadcontrol thread distribution relative to parent. - MPI Rank Mapping:

--map-by ppr:4:socket(Open MPI) or--bind-to coreensure even distribution.

First-Touch & Page Migration

Initialize arrays in the parallel context used for computation. Page migration (e.g., automatic NUMA balancing) may introduce jitter; disable if predictable locality outperforms dynamic heuristics.

Measuring Locality

Use performance counters (e.g., mem_load_retired.local_dram vs. remote_dram) or vendor profilers. High remote traffic suggests mis-pinning or irregular access.

Hybrid Strategy Example

Assign one MPI rank per NUMA domain (or per GPU) and spawn OpenMP threads confined within that domain. This reduces lock contention and keeps memory accesses local.

5. Tasking & Asynchrony (OpenMP) vs. Non-Blocking (MPI)

OpenMP Tasks

Tasks allow irregular parallelism (adaptive mesh refinement, graph traversals). Dependency clauses (depend(in:...)) create edges so runtime schedules when inputs are ready. taskloop partitions iteration spaces adaptively. detach supports asynchronous completion (e.g., integrated with I/O or device operations).

MPI Non-Blocking & Persistent Ops

Non-blocking (MPI_Isend, MPI_Irecv, MPI_Iallreduce) enable the program to return immediately and perform useful work before MPI_Wait. Persistent collectives (MPI-4) pre-initialize communication schedules reducing per-call setup overhead.

Integrating Tasks & MPI

Approach: Create a task for posting non-blocking communication, another task for dependent compute, and a task for completion wait. Careful to avoid early waits that serialize. Some runtimes integrate progress when a task yields.

Progress Considerations

If the MPI implementation lacks asynchronous progress, prolonged compute tasks starve message completion. Solutions: dedicate a communication thread; use MPI_Test polling tasks; enable progress threads (build or env variable).

Choosing Mechanism

| Need | OpenMP Tasking | MPI Non-Blocking |

|---|---|---|

| Fine-grained dynamic DAG | Strong | Weak |

| Wide-area data movement | N/A | Strong |

| Overlap compute & comm | Moderate (with polling) | Strong |

| Offload integration | Via target tasks | GPU-aware collectives |

The hybrid: OpenMP tasks schedule CPU compute & data staging while MPI transfers halos concurrently.

6. Hybrid Patterns Catalog

- Flat MPI: One rank per core (or hardware thread). Pros: Simplicity, explicit control. Cons: Memory overhead per rank, pressure on network endpoints, replicated metadata.

- MPI + OpenMP Threads: One rank per NUMA domain or socket; threads exploit shared caches. Reduces MPI envelope size; risk of false sharing & load imbalance.

- MPI + OpenMP Tasks: Adds dynamic scheduling inside rank for irregular algorithms (sparse factorization, adaptive meshes).

- MPI + OpenMP Target (GPU): CPU orchestrates data movement; kernels executed via OpenMP target constructs. Limited maturity vs. native CUDA/HIP but improving portability story.

- Process-per-GPU + Intra-Process Threads: Each rank bound to a GPU; OpenMP threads handle host staging, pre/post processing pipelines.

- Hierarchical Collectives: Intra-node reduction via shared memory + inter-node MPI reduction reduces network load (two-level schemes). Libraries sometimes auto-detect; manual optimization possible for custom data layouts.

- Hybrid Nested Parallelism: Outer level across MPI ranks; inner OpenMP parallel sections for separate pipeline stages (I/O, compute, compression) overlapping.

Trade-offs revolve around memory footprint, load balance, and latency hiding capacity.

7. Integration with Accelerators

Data Movement Paths

- Host Staging: GPU memory → host pinned buffer → NIC → remote host → remote GPU. Adds latency and consumes PCIe bandwidth.

- GPU-Direct RDMA: NIC reads/writes GPU memory directly; reduces latency & host CPU overhead.

- Peer-to-Peer (NVLink/XGMI): Intra-node GPU transfers bypass host memory.

OpenMP Target Offload Considerations

OpenMP target data regions keep allocations resident; use_device_ptr or is_device_ptr help interoperate with custom kernels. Mapping overhead can dominate short kernels; batch small kernels or fuse loops.

MPI GPU-Aware Communication

If MPI is GPU-aware, pass device pointers directly. If not, explicit staging is needed; encapsulate in utility functions to minimize code duplication.

Overlapping Compute & Transfers

Launch asynchronous GPU kernels (e.g., via vendor runtime or OpenMP nowait target) followed by non-blocking MPI using already available data; meanwhile process previous batch’s results on CPU.

Packing/Unpacking

Non-contiguous halos often require packing. Techniques: custom CUDA kernels, omp target teams distribute loops writing into contiguous buffers, or derived MPI datatypes (host side) when staging.

Memory Footprint & NUMA

Pin host staging buffers local to the NIC’s NUMA node to reduce QPI/Infinity Fabric traffic. On multi-rail systems, distribute buffers by rail.

8. Performance Modeling & Scaling Laws

Amdahl & Gustafson Revisited

Amdahl: Speedup ≤ 1 / (S + P/N) where S is serial fraction. In hybrid codes S includes: initialization, I/O, sequential phases, synchronization overhead (global barriers, collectives). Gustafson scales problem size with N making effective serial fraction shrink—provided memory capacity or bandwidth scales proportionally.

Communication-Avoiding Strategies

Blocking algorithms replaced with s-step (batched) variants reduce collective frequency: perform k local compute steps, aggregate updates once. Trade-off: extra local memory/register pressure vs. fewer high-latency ops.

LogP & Extensions

LogP parameters (L latency, o overhead, g gap, P processors) highlight that reducing message count (amortizing overhead o) can dominate optimizing peak bandwidth. For GPU-aware paths, effective o shrinks but g may increase under PCIe contention.

Roofline Hybrid View

Attainable performance bounded by min(Compute Peak, Memory BW × Operational Intensity, Network BW × Distributed Operational Intensity). Introduce Distributed OI = flops / bytes communicated per time-step. Raising local arithmetic intensity or fusing halo exchanges improves distributed OI.

Strong vs. Weak Scaling Diagnostics

| Symptom | Possible Cause | Probe | Mitigation |

|---|---|---|---|

| Flattening speedup (strong) | Communication dominated | Vary message size synthetic test | Aggregate messages, overlap, topology-aware placement |

| Super-linear speedup (weak) | Cache effects | Hardware counters | Accept; document scaling window |

| Increased variability | OS jitter / imbalance | Timeline traces | Pin interrupts, rebalance workload |

| Memory-bound plateau | Insufficient BW per rank | Stream triad vs. peak | Adjust rank/thread mapping, optimize layout |

Performance Counters & Tracing

Combine MPI profiling (PMPI) to capture call durations with OMPT callbacks for task events, merging timelines (e.g., via OTF2) to spot overlap failure.

9. Debugging & Tooling

Deadlock Patterns

- Wildcard receives (

MPI_RecvwithMPI_ANY_SOURCE) matched unexpectedly, causing circular waits. - Mismatched collectives (rank skips a collective path).

- Ordering assumptions with non-blocking ops (Wait posted in wrong sequence).

Tools

| Tool | Layer | Use Case |

|---|---|---|

| PMPI Wrappers | MPI | Instrument call durations / arguments |

| OTF2 / Score-P | MPI + OpenMP | Unified trace timeline |

| Intel VTune / AMD uProf | CPU | Memory bandwidth, hotspots |

| Nsight Systems | GPU + MPI | Overlap visualization |

| OMPT Interface | OpenMP | Task / thread events |

| Thread Sanitizer (partial) | OpenMP | Data race detection (limited for tasks) |

Race & Data Issues

OpenMP races: missing reduction clause, improper private vs firstprivate, false sharing due to contiguous scalar array updates. MPI ordering bugs: mixing blocking send with wildcard receive.

Reproducibility

Record environment (OMP settings, MPI rank mapping, GPU clock state). Small differences (turbo states) affect scaling curves.

10. Case Study: 3D Stencil (Hybrid)

Problem

Compute heat diffusion over a 3D grid (Nx×Ny×Nz) for T time steps. 7-point stencil each iteration. Domain decomposed in 3D across MPI ranks; each rank holds sub-block plus halo layers.

Baseline (Flat MPI)

Each rank updates interior, then exchanges 6 face halos via blocking sends/receives. Performance issues: idle time waiting for halos; small messages for thin faces (cache-unfriendly packing).

Hybrid Transformation

- One MPI rank per NUMA domain; OpenMP

parallel for collapse(2)over y,z planes per x-slab. - Overlap: Post non-blocking halo exchanges (

MPI_Irecv/Isend) early, compute deep interior while halos in flight, then compute boundary. - Introduce tasking: Create tasks for each face boundary update dependent on completion of corresponding halo receive requests.

GPU Offload Variant

Offload interior update to GPU; concurrently CPU packs halos and initiates MPI transfers using GPU-direct RDMA after device-to-device synchronization event.

Performance Outcomes

| Version | Time/Step | Network Idle % | Memory BW Utilization | Notes |

|---|---|---|---|---|

| Flat MPI | 1.0 (norm) | 22% | 65% | Baseline |

| Hybrid Threads | 0.78 | 14% | 72% | Better cache locality |

| Hybrid + Overlap | 0.63 | 5% | 74% | Interior/boundary overlap |

| Hybrid + Tasks | 0.59 | 4% | 75% | Task latency hiding |

| Hybrid + GPU | 0.31 | 6% | 68% (host) | GPU compute dominated |

Relative speedups demonstrate benefit stacking; diminishing returns after GPU introduction due to communication still serial fraction.

11. Common Pitfalls & Anti-Patterns

- Over-Decomposition: Excessively small MPI subdomains inflate surface/volume ratio, increasing halo overhead.

- Oversubscription: More threads than hardware contexts—context switch overhead + cache thrash.

- Eager Protocol Thrash: Many small messages trigger eager path saturating NIC; coalesce or aggregate.

- False Sharing: Adjacent frequently updated scalars on same cache line; pad or restructure.

- Implicit Barriers Everywhere: Default

parallel forbarrier slows pipeline patterns; usenowaitwhere correctness allows. - Poor Rank Mapping: Not aligning ranks with physical topology causes extra hops; leverage topology hints.

- Pinned Memory Exhaustion: Overuse of pinned buffers reduces pageable memory available; pool them.

- Ignoring Progress: Non-blocking ops without periodic test/wait lead to delayed completion.

- Premature Hybridization: Complexity increases debugging cost; measure before refactor.

- Unbalanced Task Graphs: Single long-running task serializes completion; split or add granularity controls.

12. Tuning Checklist

| Category | Check | Tool / Metric | Action |

|---|---|---|---|

| Affinity | Threads/ranks pinned? | hwloc-ps, perf counters | Adjust binding policy |

| Memory BW | Achieve ≥85% STREAM? | STREAM, VTune | Re-layout, align, prefetch |

| Communication | Large fraction time in MPI? | MPI profile % | Overlap, reduce calls, compress |

| Collectives | Dominant call? | PMPI trace | Switch algorithm / size thresholds |

| Load Balance | Straggler ranks exist? | Timeline | Domain redistribution |

| Tasks | Long idle thread time? | OMPT trace | Adjust grain size / cut tasks |

| GPU Utilization | <50% SM active? | Nsight | Kernel fusion, better batching |

| Halo Packing | High pack time? | Profiler region | Vectorize pack/unpack kernels |

| NUMA Traffic | High remote misses? | perf, numastat | Re-pin, first-touch init |

| Barriers | Many short loops w/ barrier | Trace | Add nowait or tasks |

| Allocations | Many small mallocs | heap profiler | Pool / arena allocator |

13. Future Directions (2025+)

- MPI Sessions & Partitioned Communication: Decouple initialization from

MPI_COMM_WORLD, enabling modular components and better startup scaling; partitioned send/recv encloses large transfers into sub-operations with partial completion signaling (useful for streaming large arrays out incrementally). - Endpoints / ULFM: Fault tolerance extensions (User Level Failure Mitigation) aimed at surviving rank failures without global abort—a necessity at exascale where MTBF drops.

- OpenMP Memory Spaces & Allocators: Finer control of placement (HBM vs. DDR vs. CXL). Dynamic adaptation to bandwidth pressure.

- CXL Disaggregation: Memory pooling could soften constraints of per-node DRAM, altering domain decomposition heuristics.

- PGAS Convergence: Hybrid models mixing MPI with unified PGAS abstractions (e.g., MPI+OpenMP+OpenSHMEM or GASNet-based runtimes) to reduce boilerplate for irregular remote data access.

- Energy-Aware Scheduling: Integrating power/energy metrics into runtime decisions (DVFS adjustments per phase).

- Autotuning Orchestration: ML-driven selection of thread counts, message aggregation thresholds, collective algorithms at runtime.

14. References

- MPI Forum. MPI: A Message-Passing Interface Standard (v4.1 Draft).

- OpenMP Architecture Review Board. OpenMP Application Programming Interface (5.2).

- Rabenseifner, R. Optimization of collective reduction operations.

- Hoefler, T., Schroeder, C., et al. Latency vs. bandwidth trade-offs in collective algorithms.

- Dagum, L., Menon, R. OpenMP: An industry standard API for shared-memory programming.

- Williams, S., et al. Roofline: An insightful visual performance model.

- Balaji, P., et al. MPI on many-core architectures: design challenges.

- OpenMP TR: Tasks and Dependencies Extensions.

- NVIDIA NCCL Documentation (2025) for GPU collective patterns.

- ULFM Working Group Proposals (2024–2025 revisions).