Sealing the Supply Chain: Zero-Trust Build Pipelines That Scale

An engineer’s map for rebuilding the software supply chain around zero-trust principles without stopping delivery.

The day we discovered a compromised dependency in our build pipeline felt like waking up to find the locks on our headquarters replaced. The package arrived through a trusted mirror, signed with a familiar key, yet embedded a subtle time bomb. We escaped without production impact thanks to layered defenses, but the scare pushed us to redesign our build system around zero trust. This article chronicles that transformation—technical and human—from ad hoc pipelines to hermetic, verifiable, auditable delivery.

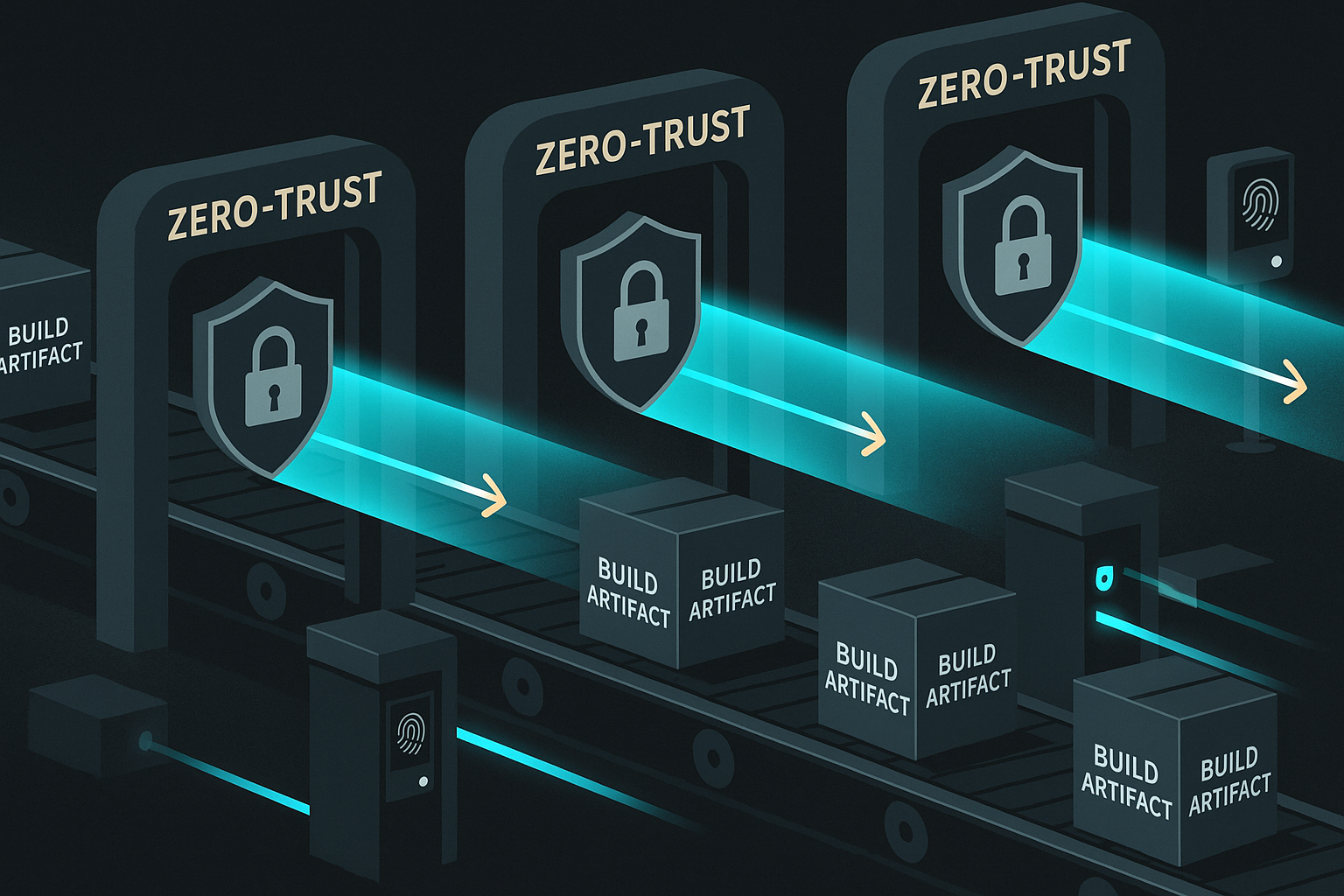

Zero trust for build systems means every actor proves identity, every artifact carries provenance, and no step assumes benevolence. We’ll explore how we designed policy, implemented reproducible builds, secured secrets, monitored behavior, and collaborated with developers to keep velocity while tightening defenses.

1. Threat model reset

We began with a threat modeling workshop involving SREs, security engineers, and developers. We mapped attack vectors:

- Compromised dependencies (typo-squatting, malicious updates).

- Insider threats (abusing CI tokens, modifying build scripts).

- Infrastructure breaches (compromised runners, cloud credentials).

- Supply-chain attacks (tampering with source repositories, build outputs).

We scored likelihood and impact, focusing on feasible attacks. We recognized that trust boundaries extended beyond internal networks; every dependency, SaaS integration, and human action could be weaponized. The outcome was a threat model document guiding priorities.

2. Principles of zero-trust pipelines

We codified principles:

- Authenticate everything: humans, services, dependencies.

- Authorize minimally: least privilege, scoped tokens, time-bound permissions.

- Verify continuously: checksums, signatures, policy enforcement at each step.

- Observe deeply: comprehensive logging, anomaly detection, tamper-evident storage.

- Automate response: rapid revocation, rollback, and communication.

These principles anchored design reviews and tooling decisions.

3. Architecture overview

Our pipeline now flows through stricter stages:

- Source control: signed commits, protected branches, mandatory reviews.

- Dependency intake: curated artifact proxies with security scanning and provenance verification.

- Hermetic builds: reproducible builds in isolated environments with pinned inputs.

- Signing and attestation: cryptographic signing of outputs and SLSA-compliant attestations.

- Policy enforcement: deployment gates verifying signatures, checksums, and policy compliance.

- Runtime verification: continuous monitoring of deployed artifacts against SBOMs.

4. Source integrity

We mandated commit signing (GPG or SSH certificates). CI refuses unsigned commits. Branch policies require two reviews for security-sensitive repos. We integrated secret scanning to reject commits containing credentials. Merge queues ensure deterministic builds—once approved, commits land via automated merges, reducing risk of human tampering.

5. Dependency hygiene

Dependencies now flow through an internal artifact repository. We mirror upstream packages after running:

- Malware scanning (static analysis, sandbox execution).

- License checks.

- Provenance validation: verifying upstream signatures, comparing to known-good checksums.

We generate SBOMs for each dependency set. Developers request new packages via a review process, providing justification and maintenance plan. The repository enforces version pinning—no floating tags. Periodic “dependency hygiene” sprints update packages while running compatibility tests in staging.

6. Hermetic build environments

Builds run inside sealed containers with read-only base images. Our build orchestrator provisions short-lived VMs with no outbound internet access; dependencies enter via pre-populated caches. Builds consume pinned inputs (Git commit, dependency versions, environment configuration). We capture environment digests: OS version, kernel, compiler flags. The goal: same inputs produce same outputs.

We validated reproducibility by running builds twice and comparing outputs bitwise. Discrepancies triggered investigations. This forced us to eliminate non-determinism (e.g., timestamps embedded in archives, random seeds). Tools like strip-nondeterminism and dettrace helped.

7. Secrets management

Secrets no longer live in build configs. We use a dedicated secrets manager issuing short-lived tokens via workload identity. CI jobs authenticate using OIDC tokens bound to specific repositories and branches. Environment variables containing secrets expire immediately after use. We log every secret access, storing logs in append-only storage. Developers never see production secrets; they receive scoped credentials for staging only.

8. Artifact signing and attestations

Upon successful build, we sign artifacts with hardware-backed keys stored in FIPS-certified HSMs. Signatures follow Sigstore/Pivotal. We also generate SLSA Level 3 attestations capturing:

- Build provenance (who triggered, commit hash).

- Build steps (commands, environment).

- Dependency digests.

- Test results.

Attestations store in an immutable ledger and attach to artifacts in the registry. Deployments verify signatures and attestations before proceeding. If anything fails, deployment halts with actionable errors.

9. Policy engine and verification

We built a policy engine powered by Open Policy Agent (OPA). Policies enforce requirements: artifacts must carry valid signatures, SBOM must pass vulnerability scans, dependencies cannot exceed risk thresholds, tests must meet coverage. Policies run at CI completion, artifact publishing, and deployment. We treat policy failures as blocking; engineers receive notifications with remediation steps.

10. Vulnerability management

SBOMs feed into a vulnerability scanner that checks for CVEs. Findings triage by severity. Critical vulnerabilities trigger immediate pipeline blocks until resolved or formally waived with compensating controls. We integrated with vendor feeds and community bulletins. False positives are documented and revisited. Automated notifications inform teams of newly discovered CVEs affecting their components.

11. Behavioral analytics

Zero trust extends to monitoring pipeline behavior. We analyze CI logs for anomalies: unusual build durations, unexpected network connections (should be none), rare commands. Machine learning models flag deviations from historical patterns. Alerts route to security on-call. We also monitor commit patterns, detecting unusual signing keys or contributions from compromised accounts.

12. Developer experience

Security cannot stall delivery. We invested in tooling to keep developers productive:

- CLI utilities to check policy locally:

zt build verifyruns the same OPA policies offline. - Friendly error messages linking to documentation.

- Automated code fixes for common issues (e.g., generating SBOM metadata).

- Caching hermetic environments to reduce build times.

We run office hours and embed security engineers within product teams to co-design solutions.

13. Rollout strategy

We migrated incrementally. Phase 1 tackled critical services, running old and new pipelines in parallel. We compared outputs, aligning reproducibility. Phase 2 expanded to all repositories, with temporary exceptions for exotic toolchains. Phase 3 enforced blocking policies. Along the way, we tracked metrics: build time, failure rate, developer satisfaction. Feedback loops refined tooling.

14. Incident response integration

When alerts fire, we follow documented response plans. Build artifacts include metadata for quick tracing: we can answer “which build produced this binary?” within seconds. Revocation workflows rotate keys, purge caches, and disable compromised accounts. We rehearse supply-chain incident drills quarterly, simulating compromised dependencies to validate detection and containment.

15. Case study: malicious dependency attempt

During rollout, a researcher intentionally uploaded a modified dependency to test defenses. The artifact repository rejected it after signature verification failed. The event triggered alerts, security triaged, and we documented response. The drill proved our controls worked end-to-end—detection, alerting, communication, and resolution executed within 12 minutes.

16. Metrics and outcomes

- Build reproducibility exceeded 99.2%; remaining variance traced to legacy components scheduled for rewrite.

- Build times increased by 12% initially but dropped to 4% overhead after caching optimizations.

- Security incidents related to CI/CD dropped to zero in the first six months.

- Audit compliance improved; external auditors praised end-to-end traceability.

17. Cultural shifts

Zero trust changed culture. Developers now treat supply-chain security as part of definition of done. Code reviews include checklists for provenance. Security updates share context, celebrating teams that reduce risk. We added training modules on signing keys, policy debugging, and SBOM literacy. Hackathons explore new tooling like automated provenance visualization.

18. Future work

We’re experimenting with verifiable builds using transparency logs (Rekor) to publish attestations publicly. We’re piloting confidential builds inside TEEs to protect against rogue infrastructure admins. We’re collaborating with partners on shared provenance standards. We also plan to integrate runtime attestation—verifying that running processes match signed binaries via TPM measurements.

19. Lessons learned

- Security improvements must pair with ergonomics; otherwise, teams bypass them.

- Transparency (dashboards, logs) builds trust in automation.

- Practice drills reveal gaps better than paperwork.

- Zero trust is iterative—threats evolve, so must controls.

20. Conclusion

Rebuilding the build system around zero trust demanded patience, tooling, and cultural humility. We now deliver software with higher confidence, backed by cryptographic provenance and vigilant monitoring. Developers ship faster because they trust the pipeline. Our supply chain isn’t invincible, but it’s resilient, observable, and ready for whatever the internet throws next.