Seeing in the Dark: Observability for Edge AI Fleets

A practitioner's guide to instrumenting, monitoring, and debugging machine learning models running at the edge.

Our edge AI deployment began with a handful of pilot devices in retail stores. Within months, thousands of cameras, sensors, and point-of-sale terminals joined the fleet. They detected shelves running low, predicted queue lengths, and flagged suspicious transactions. But when a customer called asking why a device misclassified bananas as tennis balls, we realized our observability blurred at the edge. Logs vanished into the ether, metrics arrived sporadically, and models drifted silently. This article shares how we built observability robust enough for flaky networks, sensitive data, and autonomous updates.

1. The observability problem at the edge

Edge devices live in the wild. Networks drop, power flickers, and physical tampering occurs. Traditional observability assumes reliable connectivity and homogeneous infrastructure. Edge AI introduces variability: different hardware (ARM, x86, NVIDIA Jetson), varying sensors, diverse models, and location-specific regulations. We needed a system that:

- Captures metrics, logs, traces, and model telemetry offline.

- Streams data efficiently when connectivity returns.

- Respects privacy and bandwidth constraints.

- Correlates events across device, model, and cloud services.

- Enables remote debugging and fast incident response.

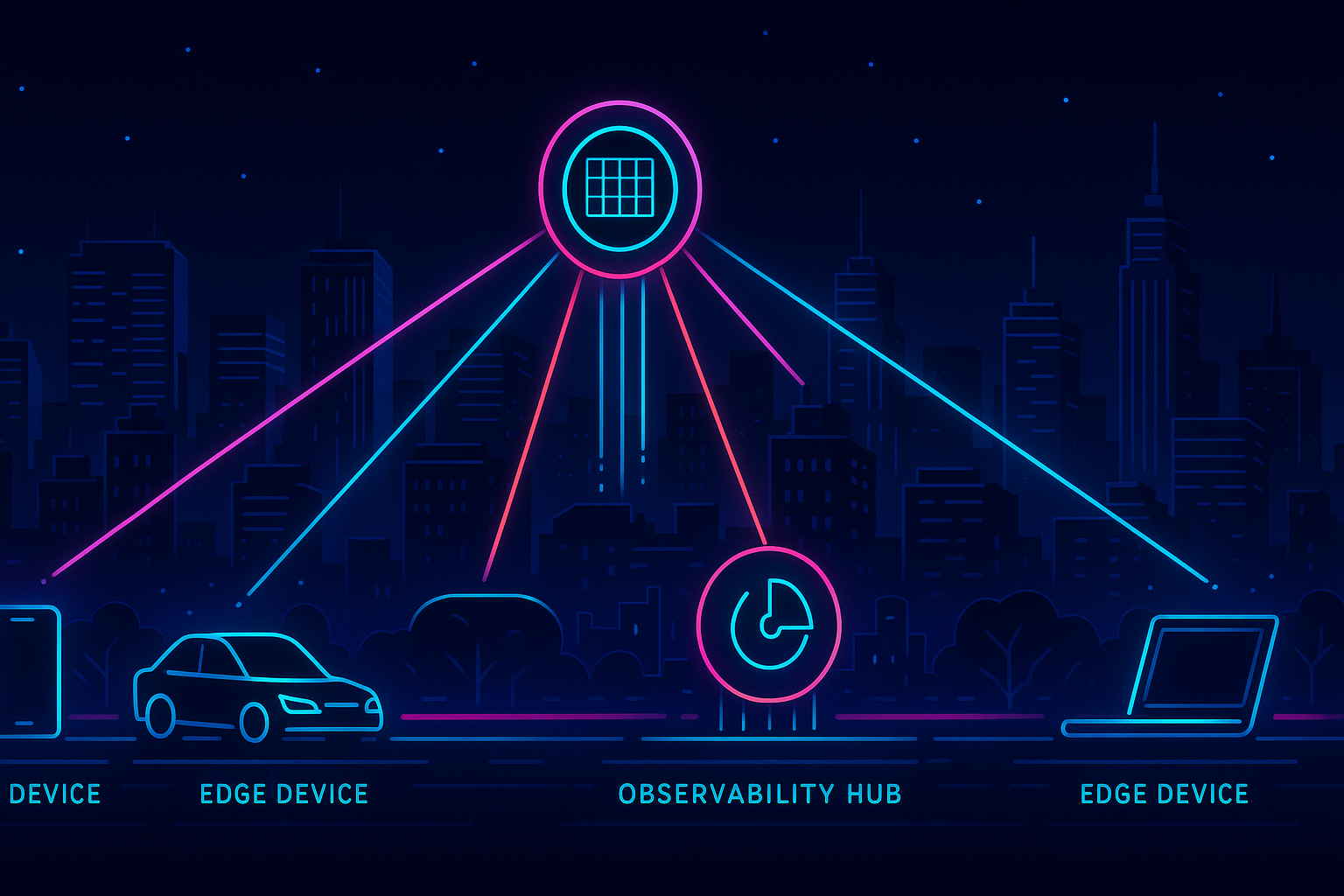

2. Designing observability architecture

We built a layered architecture:

- On-device telemetry agent: lightweight process collecting metrics, logs, traces, and model events.

- Edge gateway: local aggregator (per store/site) buffering data, enforcing policy, performing initial analytics.

- Cloud ingest: scalable endpoint receiving batched telemetry, normalizing formats, and storing in time-series and log databases.

- Observability hub: dashboards, alerting, forensic tools.

Agents communicate via gRPC over mTLS. When offline, they buffer to disk with backpressure controls.

3. Telemetry schema design

Edge telemetry includes new data types:

- Model inference summaries: counts, latency, confidence distributions, confusion matrix approximations.

- Input fingerprints: compressed representations (hashes) of sensor inputs for deduplication.

- Environmental metrics: temperature, humidity, power status.

- Connectivity stats: signal strength, packet loss, bandwidth usage.

- Lifecycle events: model version changes, firmware updates, maintenance events.

We defined schemas using Protocol Buffers, versioned carefully. Schema upgrades roll out with compatibility tests, ensuring old agents can still send minimalist payloads.

4. Privacy and data minimization

Edge devices capture sensitive data—video, transactions. We enforced minimization:

- Telemetry agents redact or hash PII before transmission.

- Video analytics send metadata, not raw frames, unless incident review requires samples (with consent workflows).

- Customers control telemetry granularity via policy (per site). We respect local regulations (GDPR, CCPA) by geofencing data storage.

We applied differential privacy to aggregated statistics when sharing across customers, ensuring no single device dominates metrics.

5. Metrics collection

Metrics include system health (CPU, GPU, memory), model performance (latency, accuracy proxies), and business KPIs (detections per hour). On-device collections use Prometheus-compatible exporters. Edge gateways run a Prometheus instance scraping local devices and storing data in TSDB with retention. When connectivity resumes, gateways ship metrics via remote write to cloud Prometheus, tagging with site metadata.

We keep local retention for seven days to support offline diagnosis. Operators can access gateway dashboards locally during network cut-offs.

6. Logging strategy

Logs capture both system events and model anomalies. We differentiate:

- Operational logs: OS events, hardware errors, service restarts.

- Model logs: input anomalies, threshold breaches, drift alerts.

To avoid log storms, we apply sampling and deduplication. Agents maintain log dictionaries, sending unique events with counters. Logs stream via Fluent Bit to gateways, where we run filters to enforce redaction policies. Cloud storage uses an ELK stack with partitioning by customer, device type, and severity.

7. Distributed tracing

Tracing at the edge required creativity. We instrumented inference pipelines with OpenTelemetry, capturing spans for sensor acquisition, pre-processing, model inference, and post-processing. Each span includes device ID, model version, and input characteristics. Because connectivity is intermittent, we buffer trace spans and send them in batches. We compress using zstd, target <1% CPU overhead.

In the cloud, we run Jaeger to visualize traces. We correlate device spans with cloud processing spans (e.g., event ingestion, alert generation) using shared trace IDs. This helps debug end-to-end latency toppers.

8. Model observability

Edge models drift due to environment changes. We track:

- Prediction distributions vs. training baseline.

- Confidence histograms to detect calibration shifts.

- Feedback loops: when human operators correct predictions, devices send labeled data snippets (anonymized) back.

- Data quality metrics: sensor noise levels, occlusion indicators.

We run drift detectors at gateways, raising alerts when PSI or KL divergence crosses thresholds. Gateways can trigger local mitigation: fallback to simpler models, request remote review, or adjust thresholds.

9. Health scoring

We built health scores combining metrics: connectivity, resource usage, model accuracy proxy, update freshness. Scores categorize devices (green/yellow/red). Dashboards show heatmaps of sites with aggregated health. Alerts trigger when clusters of devices degrade simultaneously, hinting at network or firmware issues.

10. Remote debugging tools

Observability isn’t enough without action. We developed remote debugging features:

- Live tailing: stream logs from specific devices with user consent.

- Snapshot capture: request diagnostic bundles (metrics snapshot, config, recent predictions). Bundles sign with device certificates and upload when bandwidth allows.

- Command execution: limited safe commands (restart service, run diagnostics) invoked via secure channel with audit logging.

Access requires MFA and role-based approvals to prevent misuse.

11. Edge analytics and anomaly detection

Gateways perform local analytics to reduce cloud load. We use lightweight ML models to detect abnormal behavior (e.g., sudden drop in detections). When anomalies occur, gateways tag telemetry with severity, enabling prioritization. Local analytics also decide whether to upload raw samples; only severe anomalies trigger raw data uploads for forensic review.

12. Firmware and model rollout observability

Updates caused many incidents historically. We now track rollout metrics:

- Percentage of devices updated (by version).

- Update success/failure reasons.

- Post-update health score deltas.

- Rollback counts.

A deployment dashboard visualizes progress. If failure rates exceed thresholds, automation pauses rollout and notifies engineers. Model rollouts log inference quality before/after to ensure gains.

13. Security monitoring

Edge environments are physically accessible. We log tamper events from enclosure sensors, unexpected USB insertions, and debug port access. Agents report security posture (firewall status, disk encryption). We integrate logs with SIEM, correlating with cloud events. We also monitor for telemetry anomalies that might indicate compromise (e.g., sudden spikes in CPU without traffic). Security alerts follow zero-trust principles—automatic containment (quarantine device) plus human review.

14. Scaling challenges

As fleets grew, telemetry volume exploded. We implemented adaptive sampling: critical metrics (health scores) always transmit; verbose logs sample when bandwidth tight. We added prioritization: incidents outrank routine metrics. Edge gateways compress data and schedule uploads during off-peak hours. In the cloud, we partitioned data storage by geography to respect data residency laws, using Kafka partitions to handle ingest spikes.

15. Incident response workflows

When incidents strike, our runbooks guide responders:

- Check fleet health dashboard for scope.

- Drill down into affected sites via map view.

- Inspect recent updates—model or firmware rollouts.

- Pull traces/logs from sample devices.

- Trigger remote diagnostics if needed.

- Communicate status to stakeholders via shared channel.

We track mean time to detect (MTTD) and mean time to resolve (MTTR). Observability improvements cut MTTD from hours to minutes.

16. Case study: lighting-induced drift

In one region, stores replaced lighting with warmer bulbs. Cameras misclassified product colors. Drift detectors flagged confidence drops. Dashboard showed cluster of stores with health scores red. Remote diagnostics confirmed lighting change. We retrained models with new lighting data, deployed update within 48 hours, and observed confidence recover. Without observability, we might have blamed network issues.

17. Case study: bandwidth-constrained logistics hubs

Logistics hubs experienced intermittent connectivity. Edge gateways buffered telemetry until nightly uploads. Observability pipeline detected backlog growth, alerting operators. We adjusted telemetry scheduling to send critical health metrics over satellite fallback links and delayed verbose logs. Incident response team gained visibility even during outages.

18. Developer experience

Developers interact through an observability portal. Features include:

- Querying metrics/logs by device, model version, region.

- Creating alerts templates (e.g., “model latency > 250 ms for 5 minutes”).

- Visualizing traces and comparing to historical baselines.

- Exporting data for offline analysis.

We built SDKs to instrument models easily. Developers annotate inference code with macros that emit standardized spans and metrics. CI pipelines run linting to ensure instrumentation present.

19. Customer transparency

Customers view fleet health through a self-service dashboard. They see device status, recent incidents, and scheduled maintenance. We expose controls to adjust telemetry granularity and opt into auto-updates. Transparency builds trust and reduces support tickets.

20. Lessons learned

- Offline-first design: assume networks fail; buffer and sync intelligently.

- Telemetry governance: privacy and minimization must be baked in.

- Local analytics: edge gateways add resilience and reduce cloud dependency.

- Visualization matters: map-based dashboards help non-technical stakeholders grasp issues.

- Culture: observability is everyone’s job—modelers, SREs, and field ops share responsibility.

21. Future directions

We explore federated analytics to compute metrics across devices without raw data leaving premises. We test WASM-based agents enabling hot updates. We experiment with synthetic monitoring robots that mimic device behavior for proactive alerting. And we collaborate with hardware vendors to embed observability primitives (secure enclaves exposing health counters).

22. Conclusion

Edge AI must see itself clearly to stay trustworthy. By investing in observability tailored to harsh environments, we respond faster, deploy safer, and sleep better. The bananas-to-tennis-balls incident became a legend we retell when onboarding new engineers. It’s a reminder that curiosity—asking “why did this happen?"—is the compass guiding us through the edge’s twilight.