The Hidden Backbone of Parallelism: How Prefix Sums Power Distributed Computation

Discover how the humble prefix sum (scan) quietly powers GPUs, distributed clusters, and big data frameworks—an obscure but essential building block of parallel and distributed computation.

Introduction

When most people think about parallel computing, they imagine splitting a massive task into smaller chunks and running them simultaneously. That’s the Hollywood version: thousands of processors blazing through data, crunching numbers in parallel, and finishing jobs in seconds.

The reality is both more fascinating and more subtle. Beneath the surface of supercomputers and distributed systems lies a set of seemingly modest mathematical operations—building blocks that make large-scale parallelism possible. Among these, one stands out for its simplicity and profound impact: the parallel prefix sum, also called a scan.

This operation, hidden in the guts of compilers, GPU frameworks, and big-data systems, is one of the unsung heroes of parallel and distributed computation. Today, we’ll peel back the layers to uncover why prefix sums matter, how they enable scalable algorithms, and what makes them surprisingly tricky in distributed environments.

The Prefix Sum: A Simple Definition

At its core, a prefix sum (or scan) is a transformation applied to an array.

Given an array:

[3, 1, 4, 2, 5]

The prefix sum produces:

[3, 4, 8, 10, 15]

Each element is the sum of all previous elements, including itself. That’s it—simple enough to explain to a first-year CS student.

But here’s the kicker: this trivial-looking operation underpins a staggering range of algorithms, from memory allocation in GPUs to graph traversal and string processing. In fact, Guy Blelloch’s classic paper in the 1990s established prefix sums as one of the foundational primitives of parallel computation—right alongside sorting and matrix multiplication.

Why Prefix Sums Matter in Parallel Systems

Why do we care so much about a glorified running sum? The answer lies in dependency structure.

- Many algorithms require knowing “how many things came before me.”

- Others need to compact data efficiently (e.g., filtering out unwanted elements in an array).

- Graph algorithms like breadth-first search (BFS) rely on quickly computing offsets for neighbors.

In serial code, this is trivial: a single loop walks through the array, carrying forward a running total. But in parallel, this sequential dependency becomes a bottleneck. Naively, it seems you can’t compute the 5th prefix sum until you know the 4th, the 4th until you know the 3rd, and so on.

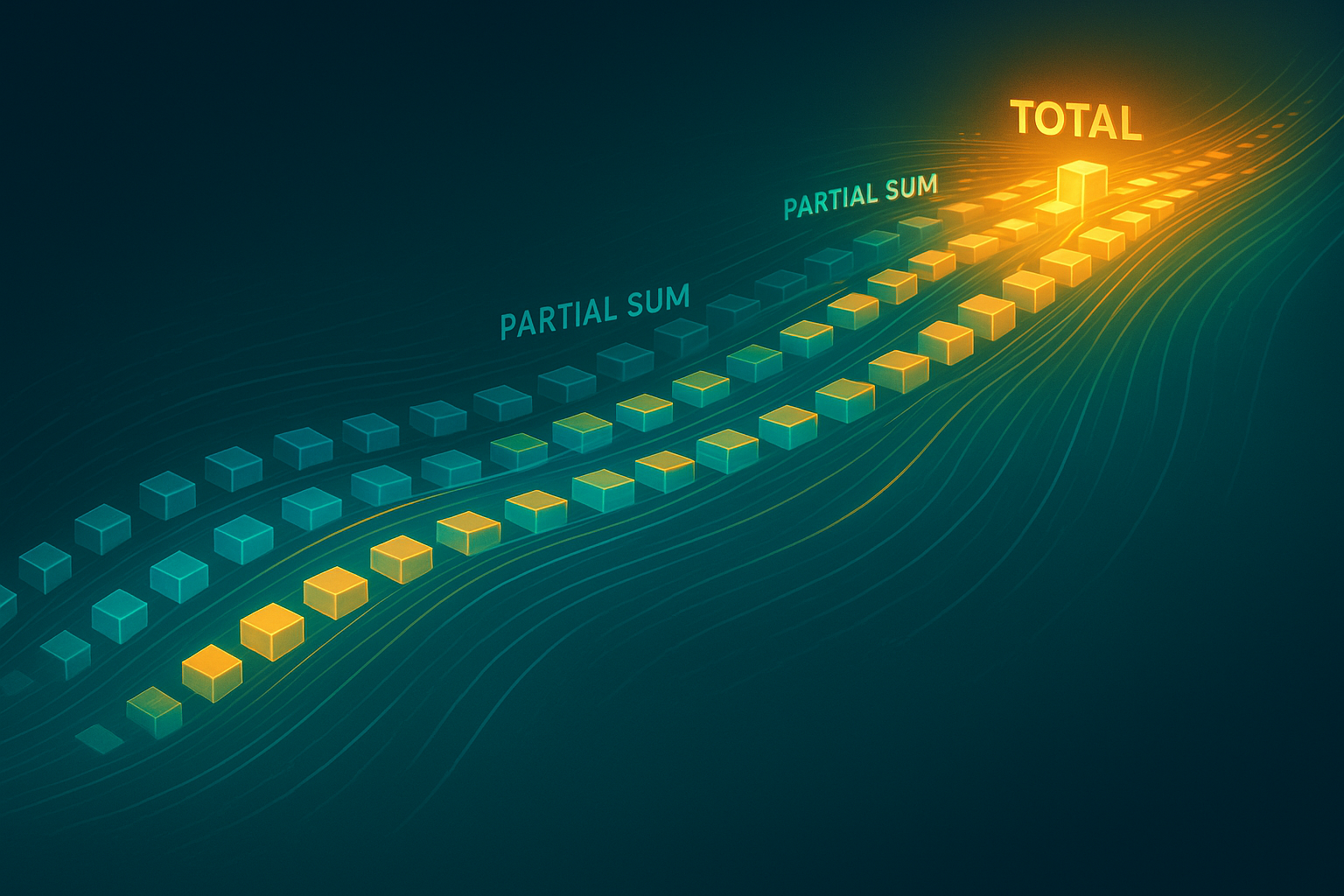

The brilliance of parallel prefix algorithms is that they break this dependency chain by restructuring the computation into a tree of partial sums. Instead of a linear (O(n)) bottleneck, prefix sums can be computed in (O(\log n)) depth with (O(n)) work—making them scalable to thousands or millions of processors.

Blelloch Scan: The Classic Algorithm

Let’s zoom in on the Blelloch scan, the canonical parallel algorithm. It has two phases:

- Upsweep (reduce): Build a tree of partial sums. Think of processors combining pairs of elements, then pairs of pairs, and so on, until you have a single root sum.

- Downsweep: Traverse back down the tree, propagating prefix information so each node learns the correct prefix value.

Visually, it looks like a binary tree growing and then folding back on itself.

- Work: (O(n))

- Depth: (O(\log n))

This efficiency is why prefix sums are so central to parallel programming libraries like CUDA Thrust, OpenMP, and MPI.

Distributed Prefix Sums: When Communication Becomes the Bottleneck

On a single multicore CPU or GPU, prefix sums are relatively well-understood. But once we scale out to distributed systems—clusters of machines communicating over a network—things get tricky.

The Challenge of Distribution

Each machine holds a chunk of the data. Computing local prefix sums is easy, but stitching them together requires communication:

- Each node computes prefix sums on its chunk.

- Each node needs the total sum of all previous nodes to adjust its values.

- This requires exchanging data across the cluster, introducing latency and synchronization.

Now, the cost of network communication dominates. What was once a simple (O(\log n)) algorithm becomes bottlenecked by bandwidth and latency constraints.

This is where distributed systems researchers obsess over collective communication primitives. MPI, the Message Passing Interface, implements prefix sums as the operation MPI_Scan. It’s no accident: prefix sums are so critical they deserve a built-in primitive.

Real-World Applications

Prefix sums pop up in surprising places:

- Memory Management in GPUs: When allocating memory for variable-sized data, prefix sums determine offsets so each thread knows where to write.

- Compaction & Filtering: Removing invalid elements from a dataset requires knowing the “new index” for valid ones, computed via scan.

- Parallel Sorting: Radix sort, a highly parallel algorithm, relies heavily on prefix sums for bucket indexing.

- Graph Analytics: Traversing adjacency lists in BFS or PageRank requires offset computations driven by scans.

- Big-Data Frameworks: Systems like Spark and Hadoop use variants of prefix sums in shuffle operations and cumulative aggregations.

Without prefix sums, parallel systems would grind to a halt, bottlenecked by the need to coordinate work across processors.

Obscure Mathematical Insights

Here’s where things get even more interesting. The prefix sum is just one instance of a broader class of operations: parallel prefix operations.

Formally, given an associative binary operator ⊕, the prefix computation produces:

[ [y_0, y_1, y_2, \dots, y_{n-1}] ]

where

[ y_k = x_0 ⊕ x_1 ⊕ \dots ⊕ x_k ]

The key requirement: associativity.

- Works: addition, multiplication, min, max, gcd, bitwise operations.

- Doesn’t work: subtraction, division (non-associative).

This abstraction opens up a universe of algorithms: parallel prefix for computing factorials, gcd scans for cryptographic preprocessing, or even “max-prefix” operations for load balancing in distributed queues.

In fact, prefix sums form the backbone of what’s called a parallel prefix circuit, a structure studied extensively in theoretical computer science. Some of the fastest adders in CPUs (like the Kogge-Stone adder) are, at heart, parallel prefix networks.

Lessons from Supercomputing

On supercomputers, prefix sums highlight a constant trade-off: latency vs throughput.

- GPUs optimize for throughput: thousands of threads hiding memory latency.

- Distributed clusters optimize for reducing communication rounds: clever tree-based algorithms minimize how often nodes must talk.

Research in this space is ongoing. For example, hybrid algorithms combine local scans with inter-node reductions, overlapping computation with communication to squeeze out more performance. Others exploit hierarchical topologies: optimize within a node, then within a rack, then across racks.

The Obscurity Factor

So why is this topic obscure, despite being so foundational? Because prefix sums are almost always hidden from end-users.

- When you call

thrust::exclusive_scanin CUDA, you don’t think about the two-phase tree traversal behind it. - When Spark executes a

cumulativeSum()on a dataset, you don’t see the MPI-style communication happening across the cluster. - When a CPU executes an integer addition, you don’t realize that a parallel prefix adder is sitting at the hardware level.

Prefix sums are like plumbing: invisible but indispensable. They’re the connective tissue that makes higher-level abstractions work.

The Future: Beyond Sums

Researchers are pushing prefix operations into new domains:

- Probabilistic Data Structures: Prefix scans in sketching algorithms for streaming data.

- Machine Learning: GPU tensor libraries use scans for dynamic batching and sparse matrix operations.

- Quantum Computing: Even proposed quantum algorithms include prefix-style accumulations for state preparation.

As systems grow ever more parallel, from thousands of cores on a chip to millions of processes in a data center, the humble prefix sum will only grow more important.

Conclusion

The parallel prefix sum is one of those rare concepts in computer science that looks trivial but shapes the very foundations of parallel and distributed computation. From supercomputers to GPUs, from Spark jobs to CPU adders, it quietly enables scalability by breaking dependencies and orchestrating order across chaos.

If you’ve ever filtered data on a GPU, trained a neural network, or run a distributed sort on terabytes of logs, chances are a prefix sum was working behind the scenes—unsung, unnoticed, but indispensable.

The next time you marvel at parallelism, remember: beneath the flash of thousands of processors, there’s often a simple scan, summing away in silence.