The Quiet Calculus of Probabilistic Commutativity

A practical calculus for quantifying when non-commutative operations in distributed systems can be safely executed without heavyweight coordination.

Abstract

Eventual consistency dominates many internet-scale systems, but reasoning about concurrency under minimal coordination remains ad hoc. This post introduces “probabilistic commutativity” — a lightweight calculus for reasoning about whether concurrent operations, under reasonable stochastic assumptions about ordering and visibility delays, are likely to commute in practice. Probabilistic commutativity offers an intermediate lens between strict algebraic commutativity and empirical test-driven guarantees, enabling low-overhead coordination strategies and probabilistic correctness arguments for producing practically consistent distributed services.

1. Introduction

Designing distributed systems with low latency and high availability often forces engineers to relax strict consistency guarantees. Eventual consistency, CRDTs, and causal consistency are standard tools, but they come at a cost: operational complexity, added metadata, or coordination overhead. When the cost of strict correctness is high, practitioners often accept “good enough” behavior supported by engineering safeguards and probabilistic observations — but this approach lacks a concise language to reason about why and when it’s acceptable.

This post proposes a formal yet practical concept: probabilistic commutativity. The core idea is to quantify the likelihood that two operations commute given a stochastic model of message delays, concurrency windows, and operation semantics. If two operations commute with high probability under the target deployment regime, then the system can avoid heavy coordination between them and remain correct with quantifiable risk.

The post walks through the intuition, a minimal calculus, a worked example applied to counters and append-only logs, a simple evaluation recipe, and limitations and directions for future work. I’ll keep the math light and the focus practical — think “medium-style academic” rather than a dense journal article.

2. Background and motivation

Commutativity is central to low-coordination distributed algorithms. If operations commute, their order doesn’t matter; replicas can apply them in any order and reach the same state. CRDTs exploit algebraic commutativity (or semi-lattice joins) to provide deterministic convergence without coordination. Yet algebraic commutativity is strict and often impossible for interesting operations: consider bank transfers, conditional updates, or unique-name allocation.

On the other hand, engineers often observe that many non-commutative operations effectively commute in real deployments because of the rare overlaps of conflicting windows, skewed workloads, or natural causal chains. But “effectively commute” is informal; it lacks a composable language for reasoning. Probabilistic commutativity fills this gap by supplying:

- A definable probabilistic model for concurrency and visibility.

- An analytical criterion (or approximation) that returns the probability two operations’ outcomes diverge when applied in different orders.

- Rules for composing probabilities across sequences of operations, with conservative bounds.

This calculus is not a replacement for algebraic CRDT design or strong transactional guarantees. It is a complementary reasoning tool: when the computed risk of divergence is negligible, one might choose lightweight techniques (retries, anti-entropy, last-writer-wins with tombstones) rather than heavy coordination.

3. Model and definitions

We work with a simplified asynchronous model familiar from distributed systems:

- Replica set: a finite set R of replica nodes, each applying operations to its local state.

- Operation o: an abstract transformation from state to state. For simplicity, treat most operations as deterministic functions on state and side effects (e.g., appending, incrementing).

- Visibility delay: when an operation is issued at replica A, it becomes visible to replica B after a random delay D_AB sampled from a distribution D (which may depend on network conditions, routing, or system configuration).

- Concurrency window: two operations o1 at time t1 and o2 at time t2 are concurrent for a replica B if each operation’s visibility to B occurs after the other is issued; formally, v1 > t2 and v2 > t1 where v1, v2 are visibility times to B.

- Commutativity under orderings: Two operations o1 and o2 commute on a state s if applying o1 then o2 equals applying o2 then o1.

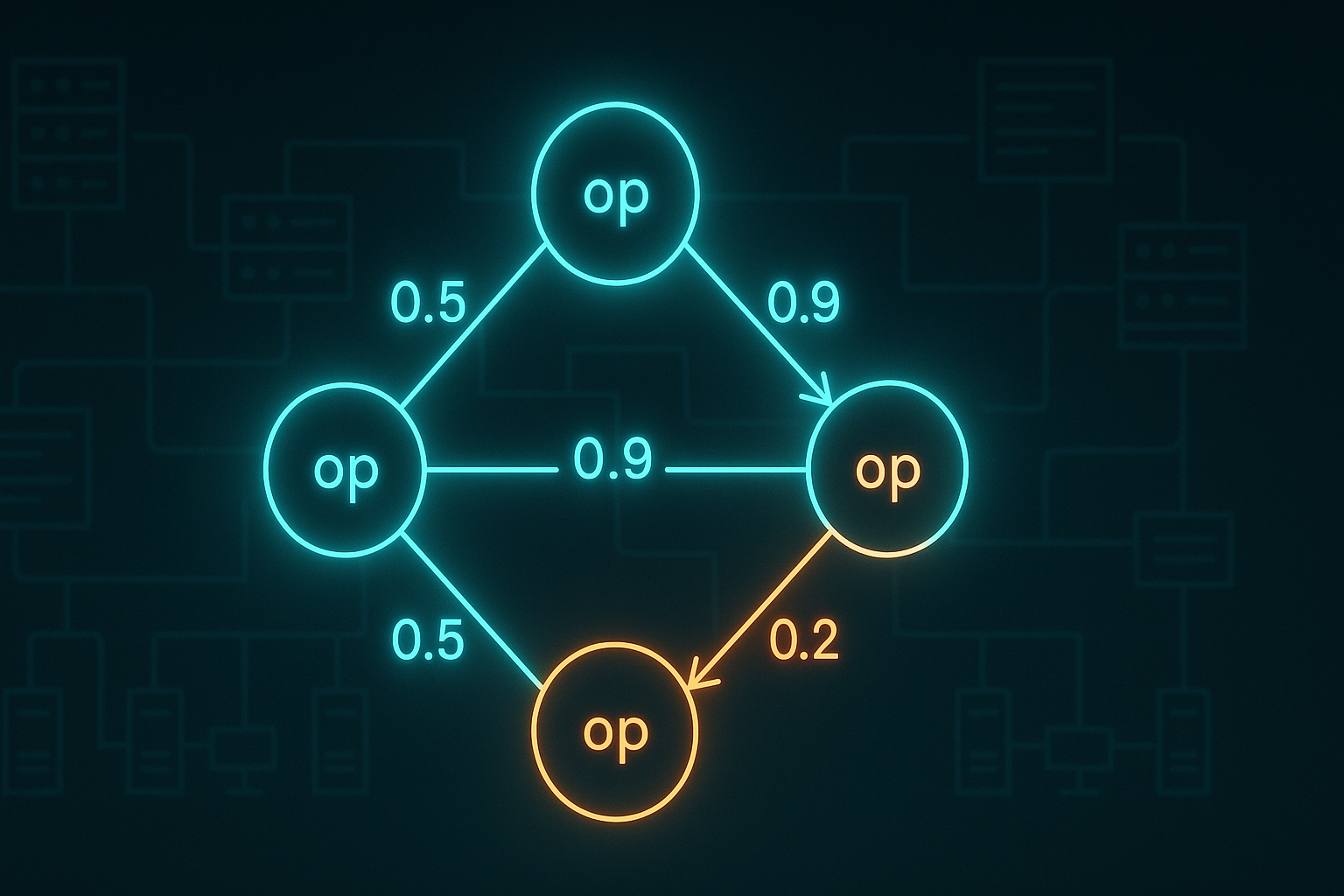

Probabilistic commutativity focuses on the distribution over visibility permutations and, for multi-replica systems, the vector of visibilities to each node. Let V denote the random vector of visibilities for {o1,o2} across replicas.

Definition (probabilistic commutativity). Given a pair of operations (o1, o2), a prior distribution on their issuance times and visibility delays, and an initial state distribution S, define P_commute(o1,o2) to be the probability that all replicas end up in the same state regardless of the order in which o1 and o2 are delivered and applied under the modeled visibilities.

Concretely: Pcommute = Pr{S,V}[forall replicas r: state_r(o1→o2) = state_r(o2→o1)]

This includes subtle differences: if replicas apply operations in different orders but anti-entropy reconciles them to the same state later, that reconciliation is part of the model. The calculus therefore must reason about not only local application but also the convergence mechanisms in place.

4. Minimal calculus

We propose a compact stepwise approach to compute or bound P_commute for common patterns:

Operation semantics summary: categorize operations into simple types:

- Idempotent-commutative: e.g., set-to-1 (with monotone semantics), commutative only under join semantics.

- Additive: increments to counters (may not commute with conditional subtracts).

- Conditional: reads and then updates (e.g., compare-and-set).

- Append-only: logs and causal sequences.

Visibility and issuance model: assume issuance times t1,t2 and visibility delays D sampled (possibly independently) from distributions. Often a simple parametric family (exponential or lognormal) suffices to capture tail behavior.

Per-replica ordering probability: for each replica r, compute p_r = Pr[state_r differs when applying o1→o2 vs o2→o1]. For basic cases p_r reduces to the probability that both operations are concurrent at r and their semantics produce divergent outcomes.

System-level composition: conservative union bound yields P_diverge ≤ sum_r p_r. For replicas that reconcile deterministically (e.g., merge via a CRDT), the effective p_r is reduced; for gossip-based anti-entropy, consider the probability the divergence survives until an external observation (user read) or causes incorrect external side effects.

Tailoring to read semantics: if the user reads from one replica, you can replace the sum over replicas with the single p_r for the read replica. For quorum reads, adapt accordingly.

Example analytic patterns:

- Two independent increments on a counter: P_commute = 1 (they commute).

- Increment and conditional-decrement-if-positive: reduce to the probability the increment arrives before the conditional check at the target replica; compute via distributions of D and t spacing.

5. Worked examples

Example 1: increment vs conditional-reset

Consider a key that supports increment (inc) and reset-if-zero (reset_if_zero: set to 0 only if current value is 0). inc and reset_if_zero are non-commutative: if reset runs on a stale replica that doesn’t see inc, it may set the value to 0, erasing inc. But in many operations workloads, inc happens orders of magnitude more frequently than resets and network delays are short; then P_commute could be close to 1.

Assume:

- Single writer model for both ops, issued at t1 and t2 with Δ = t2 − t1.

- Visibility delays to a read replica are iid Exp(λ). For replica r:

- inc visible at v1 = t1 + D1

- reset visible at v2 = t2 + D2

- Divergence at r happens if reset executes when v2 < v1 and reset’s local read sees 0. Compute Pr[v2 < v1] = Pr[D2 − D1 < t1 − t2] given Δ and D pdfs. For symmetric exponential delays, closed forms exist.

This yields a simple formula linking workload spacing Δ and delay tail λ to divergence probability. Systems with moderate Δ and small λ (fast networks) will have vanishing divergence probability.

Example 2: unique-name allocation via optimistic reservation

Suppose two clients attempt to claim unique slugs by performing read-if-empty then write. Conflicts are rare when issuance windows are disjoint. Probabilistic commutativity quantifies the chance of both writes succeeding in different replicas and later producing duplicate names observed by a reader. With anti-entropy and a uniqueness-check during user-visible read, the risk can be bounded and mitigated cheaply (retry, ephemeral blocking).

Example 3: append-only log with idempotent deduplication

Append-only logs are commonly treated as commuting. But operations that append objects with non-globally-unique IDs can cause visible duplication in reads until deduplication completes. Model the deduplication window (time until all replicas observe and dedup), compute the probability a client will observe duplicates given read routing, and use that to select deduplication strategies.

6. Practical recipe for engineers

When you have a pair (or small set) of operations and want to decide whether to coordinate:

- Classify operation types and semantics. Write down the divergence condition (what outcome difference would be considered an error).

- Estimate issuance spacing Δ from traces (median and tail).

- Measure or assume visibility delay distribution D (median + tail).

- Compute per-replica p_r (often a simple tail integral).

- Compose conservatively for system-level exposure (sum or max depending on read model).

- Translate probability into an operational mitigation decision:

- If P_diverge < ε (e.g., 1e-6), avoid coordination and rely on lightweight retries or compensating actions.

- If P_diverge is borderline, add cheap mitigations: single-read-after-write, local fencing, short leases, or weak quorum checks.

- If P_diverge is unacceptable, use stronger coordination: consensus or linearizable operations.

7. Short evaluation sketch

A compact empirical evaluation can demonstrate the utility of probabilistic commutativity:

- Setup: a small cluster (3–5 replicas) deployed in a cloud region with controlled network delay injection and a workload generator that emits paired operations with tunable Δ.

- Metrics:

- Observed divergence rate per operation pair.

- Time until convergence (anti-entropy latency).

- User-visible error rate under different read strategies.

- Experiments:

- Vary Δ across orders of magnitude.

- Vary network tail by injecting delay distributions (simulate cross-region vs intra-region).

- Compare against baselines: pessimistic locking, CRDT-based resolution, and no-coordination with retries.

Expected findings: many pairs that are algebraically non-commutative will show divergence probabilities low enough to justify coordination avoidance under typical intra-region conditions, but cross-region latencies increase risk significantly.

8. Limitations and caveats

- Assumptions drive results. Bad model choices (e.g., wrong visibility delay tails) can grossly understate risk.

- Probabilistic guarantees are not safety guarantees. Systems where correctness is legally or financially critical should not rely on probabilistic commutativity for core invariants.

- Composability is tricky. Extending pairwise reasoning to large sequences requires careful composition rules and conservative bounds.

- Attack surface: adversarial clients can force worst-case issuance patterns; use authentication, rate-limiting, or server-side validation to harden.

- Measurement burden: you must instrument issuance timestamps and visibility latencies, which may be non-trivial in multi-tenant services.

9. Related work and intellectual context

Probabilistic reasoning in distributed systems has precedents: probabilistic quorums, probabilistically bounded staleness, and systems that model tail latencies (PPr, PBS). The novelty here is packaging a lightweight calculus targeted at commutativity decisions, rather than full correctness proofs. It sits between algebraic CRDT work (Shapiro et al.) and operational analytics like PBS (Bailis et al.).

10. Conclusion and next steps

Probabilistic commutativity does not replace formal correctness but provides a practical, quantifiable tool for engineering trade-offs in latency-sensitive systems. It empowers teams to defer heavyweight coordination where risk is quantified and mitigated. Future directions include:

- Building a small library that computes p_r for common operation patterns (counter+conditional, optimistic-unique-claim, append+dedupe).

- Integrating the toolkit with observability pipelines to continuously re-evaluate P_commute under changing traffic and network conditions.

- Combining with policy-based mitigations (e.g., auto-escalate to coordination under detected elevated divergence risk).

If you’d like, I can produce a runnable notebook that computes P_commute for the inc vs reset example with configurable delay distributions.